By far the most potent source of energy is gravity. Using it as power, future aircraft will attain the speed of light.

Nuclear-powered aircraft are yet to be built, but there are research projects already under way that will make the super-planes obsolete before they are test-flown. For in the United States and Canada research centers, scientists, designers and engineers are perfecting a way to control gravity - a force infinitely more powerful than the mighty atom. The result of their labors will be anti-gravity engines working without fuel - weightless airliners and space ships able to travel at 170,000 miles per second.

If this seems too fantastic to be true, here is something to consider - the gravity research has been supported by Glenn L. Martin Aircraft Co., Convair, Bell Aircraft, Lear Inc., Sperry Gyroscope and several other American aircraft manufacturers who would not spend millions of dollars on science fiction. Lawrence D. Bell, the famous builder of the rocket research planes, says, "We're already working with nuclear fuels and equipment to cancel out gravity." And William Lear, the autopilot wizard, is already figuring out "gravity control" for the weightless craft to come.

Gravitation - the mutual attraction of all matter, be it grains of sand or planets - has been the most mysterious phenomenon of nature. Isaac Newton and other great physicists discovered and described the gravitational law from which there has been no escape. "What goes up must come down," they said. The bigger the body the stronger the gravity attraction it has for other objects ... the larger the distance between the objects, the lesser the gravity pull. Defining those rigid rules was as far as science could go, but what caused gravity nobody knew, until Albert Einstein published his Theory of Relativity.

In formulating universal laws that would explain everything from molecules to stars, Einstein discovered a strong similarity between gravitation and magnetism. Magnets attract magnetic metals, of course, but they also attract and bend beams of electronic rays. For instance, in your television picture tube or electron microscope, magnetic fields sway the electrons from their straight path. It was the common belief that gravitation of bodies attracted material objects only - then came Einstein's dramatic proof to the contrary.

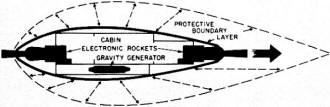

The G-plane licks "heat barrier" problem of high speed by creating its own gravity field. Gravity generator attracts surrounding air to form a thick boundary layer which travels with craft and dissipates heat. Electronic rockets provide forward and reverse thrust. Crew and passenger cabins are also within ship's own gravity field, thus making fast acceleration and deceleration safe for occupants.

Pre-Einstein physicists were convinced that light traveled along absolutely straight lines. But on May 29, 1919, during a full eclipse of the sun, Einstein proved that the light rays of distant stars were attracted and bent by the sun's gravitation. With the sun eclipsed, it was possible to observe the stars and measure the exact "bend" of their rays as they passed close to the sun on their way to earth.

This discovery gave modern scientists a new hope. We already knew how to make magnets by coiling a wire around an iron core. Electric current running through the coiled wire created a magnetic field and it could be switched on and off at will. Perhaps we could do the same with gravitation.

Einstein's famous formula E = mc² - the secret of nuclear energy - opened the door to further research in gravitation. Prying into the atom's inner structure, nuclear scientists traced the gravity attraction to the atom's core - the nucleus. First they separated electrons by bombarding the atom with powerful electromagnetic "guns." Then, with even more powerful electromagnetic bombardment, the scientists were able to blast the nucleus. The "split" nucleus yielded a variety of heretofore unknown particles.

In the course of such experiments, Dr. Stanley Deser and Dr. Richard Arnowitt of Princeton's Institute for Advanced Study found the gravity culprit - tiny particles responsible for gravitation. Without those G-(gravity) particles, an atom of, say, iron still behaved as any other iron atom except for one thing - it was weightless.

With the secret of gravitation exposed, the scientists now concentrate their efforts on harnessing the G-particles and their gravity pull. They are devising ways of controlling the gravity force just as the vast energy of a nuclear explosion has been put to work in a docile nuclear reactor for motive power and peaceful use. And once we have the control of those G-particles, the rest will be a matter of engineering.

According to the gravity research engineers, the G-engine will replace all other motors. Aircraft, automobiles, submarines, stationary powerplants - all will use the anti-gravity engines that will require little or no fuel and will be a mechanic's dream. A G-engine will have only one moving part - a rotor or a flywheel. One half of the rotor will be subjected to a de-gravitating apparatus, while the other will still be under the earth's gravity pull. With the G-particles neutralized, one half of the rotor will no longer be attracted by the earth's gravitation and will therefore go up as the other half is being pulled down, thus creating a powerful rotary movement.

Another, simpler idea comes from the Gravity Research Foundation of New Boston, N. H. Instead of de-gravitating one half of the rotor, we would merely shield half of it with a gravity "absorber." The other half would still be pulled down and rotation would result (see sketch).

The anti-gravity engine rotor is partially shielded by the gravity absorber. The gravity force acts only on the exposed half of the rotor, which creates a powerful rotary motion. This particular device is suitable for powering ground vehicles.

For an explanation of how the gravity "absorber" would work, let's turn to gravity's twin brother - magnetism. If you own an ordinary watch, you must be forever careful not to get it magnetized. Even holding a telephone receiver can magnetize the delicate balance wheel and throw the watch out of time. Therefore, an anti-magnetic watch is the thing to have. The inner works of such a watch are shielded by a soft iron casing which absorbs the magnetic lines of force. Even in the strongest magnetic field, the shielded balance wheel is completely unaffected by the outside magnetic pull. In a similar manner, a gravity "absorber" would prevent the earth's gravity from acting upon the shielded portion of our G-engine.

Applied to engines, a gravity absorber would be a boon, but its true value would be in aircraft construction where the weight control engineers get ulcers trying to save an ounce here, a pound there. Of course, an indiscriminate shielding of an aircraft and the resulting total weightlessness is not what we would want. A de-gravitated aircraft would still be subject to the centrifugal force of our rotating globe. Freed from the gravity pull, a totally weightless aircraft would shoot off into space like sparks flying off a fast-spinning abrasive grinding wheel. So, the weight, or gravity, would have to be reduced gradually for take-off and climb. For level flight and for hovering, the weight would be maintained at some low level while landing would be accomplished by slowly restoring the craft's full weight.
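The centrifugal effect mentioned above can be put into rough numbers. The following is a minimal sketch, assuming a craft sitting at the equator and standard textbook values for the earth's radius, rotation period and surface gravity; none of these figures or function names come from the article.

```python
import math

# Standard reference values (assumptions, not taken from the article).
SIDEREAL_DAY_S = 86164.1        # one full rotation of the earth, in seconds
EQUATORIAL_RADIUS_M = 6.378e6   # earth's equatorial radius, in meters
SURFACE_GRAVITY = 9.81          # ordinary gravitational acceleration, m/s^2


def rim_speed(radius_m=EQUATORIAL_RADIUS_M, period_s=SIDEREAL_DAY_S):
    """Eastward speed of a point on the equator, in meters per second."""
    return 2 * math.pi * radius_m / period_s


def centrifugal_accel(radius_m=EQUATORIAL_RADIUS_M, period_s=SIDEREAL_DAY_S):
    """Outward acceleration felt in the rotating frame, in m/s^2."""
    omega = 2 * math.pi / period_s   # angular speed, radians per second
    return omega ** 2 * radius_m


speed = rim_speed()
accel = centrifugal_accel()
print(f"equatorial speed: {speed:.0f} m/s")
print(f"outward acceleration: {accel:.4f} m/s^2")
print(f"fraction of normal gravity: {accel / SURFACE_GRAVITY:.4f}")
```

With these values the outward acceleration is a small fraction of ordinary gravity, so a fully weightless craft would drift away from the ground gently at first while keeping the eastward speed it had on the surface.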

The gravity-defying engineers claim that the problem of this lift control is a cinch. The shield would have an arrangement similar in principle to the venetian blind - open for no lift and closed for decreased weight and increased lift.

No longer dependent on wings or rotors, the G-craft would most likely be an ideal aerodynamic shape - a sort of slimmed-down version of the old-fashioned dirigible balloon. Since weight has a lot to do in limiting the size of today's aircraft, a perfect weight control of the G-craft would remove that barrier and would make possible airliners as big as the great ocean liner the S.S. United States.

A G-airliner would be a real speed demon. The coast-to-coast flight time would be cut to minutes even with the orthodox rocket propulsion. You may wonder about the air friction "heat barrier" of high-speed aircraft, but the gravity experts have an answer for that, too. Canadian scientists headed by Wilbur B. Smith - the director of the "Project Magnet" - visualize an apparatus producing a gravitational field in the G-ship. This gravity field would attract the surrounding air to form a thick "boundary layer" which would move with the ship. Thus, air friction would take place at a distance from the ship's structure and the friction heat would be dissipated before it could warm up the ship's skin (large diagram).

When electric current from the battery is switched on, the coil will create a magnetic field which repels the aluminum disk and makes it shoot upward. Future ships may be built of diamagnetic metals with specially rearranged atomic structure.

The G-ship's own gravity field would perform another useful function. William P. Lear, the chairman of Lear, Inc., makers of autopilots and other electronic controls, points out, "All matter within the ship would be influenced by the ship's gravitation only. This way, no matter how fast you accelerated or changed course, your body would not feel it any more than it now feels the tremendous speed and acceleration of the earth." In other words, no more pilot blackouts or any such acceleration headaches. The G-ship could take off like a cannon shell, come to a stop with equal abruptness and the passengers wouldn't even need seat belts.

This ability to accelerate rapidly would be ideal for a space vehicle. Eugene M. Gluhareff, President of Gluhareff Helicopter and Airplane Corporation of Manhattan Beach, California, has already designed several space ships capable of travel at almost the speed of light, or about 600,000,000 miles per hour. At that speed, the round trip to Venus would take just over 30 minutes. Of course, ordinary chemical rockets would be inadequate for such speeds, but Gluhareff already figures on using "atomic rockets."
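The speed and trip-time figures above can be checked with simple arithmetic. This is a minimal sketch, assuming a constant cruising speed with no time spent accelerating, no relativistic effects, and approximate earth-to-Venus distances of 25 million miles at closest approach and 162 million miles at the farthest; those distances and all names in the code are assumptions for illustration, not figures from the article.

```python
# Standard value for the speed of light (assumption, not from the article).
LIGHT_MILES_PER_SECOND = 186_282

# The cruising speed quoted in the article.
G_SHIP_MPH = 600_000_000

# Approximate earth-to-Venus distances in miles (assumed, rounded values).
VENUS_NEAREST_MILES = 25_000_000
VENUS_FARTHEST_MILES = 162_000_000


def miles_per_hour(miles_per_second):
    """Convert a speed from miles per second to miles per hour."""
    return miles_per_second * 3600


def round_trip_minutes(one_way_miles, speed_mph):
    """Minutes for an out-and-back trip at a constant speed."""
    return 2 * one_way_miles / speed_mph * 60


light_mph = miles_per_hour(LIGHT_MILES_PER_SECOND)
print(f"speed of light: {light_mph:,} mph")
print(f"G-ship speed as a fraction of light: {G_SHIP_MPH / light_mph:.2f}")
print(f"round trip, Venus nearest: {round_trip_minutes(VENUS_NEAREST_MILES, G_SHIP_MPH):.1f} min")
print(f"round trip, Venus farthest: {round_trip_minutes(VENUS_FARTHEST_MILES, G_SHIP_MPH):.1f} min")
```

Under these assumptions the "just over 30 minutes" figure corresponds to a round trip made when Venus is near its greatest distance from the earth; at closest approach the same arithmetic gives only a few minutes.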

At least one such "atomic rocket" design has been worked out by Dr. Ernest Stuhlinger, a physicist of the U.S. Army Redstone Arsenal at Huntsville, Alabama. Dr. Stuhlinger's rocket would use ions - atoms with a positive electric charge. To produce those ions, Dr. Stuhlinger takes cesium, a rare metal that liquefies at 71° F. Blown across a platinum coil heated to 1000° F., liquid cesium is ionized, the ions are accelerated by a 10,000 volt electromagnetic "gun" and shot out of a tail pipe at a velocity of 186,324 miles per second.
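The speed an ion gains from an accelerating voltage can be estimated from energy conservation, qV = ½mv². The following is a minimal nonrelativistic sketch, assuming singly charged cesium-133 ions and the 10,000-volt figure quoted above; the physical constants are standard values and the function name is hypothetical. Under these assumptions the result is on the order of a hundred kilometers per second, so the exhaust velocity quoted above is best read as the article's own claim and not as something this formula reproduces.

```python
import math

# Standard physical constants (assumptions, not taken from the article).
ELEMENTARY_CHARGE_C = 1.602176634e-19    # charge of one electron, coulombs
ATOMIC_MASS_UNIT_KG = 1.66053906660e-27  # one atomic mass unit, kilograms
CESIUM_MASS_U = 132.905                  # mass of a cesium atom, atomic mass units
METERS_PER_MILE = 1609.344


def ion_exhaust_speed(voltage_v, mass_u=CESIUM_MASS_U, charge_state=1):
    """Nonrelativistic speed (m/s) of an ion accelerated from rest through voltage_v."""
    energy_j = charge_state * ELEMENTARY_CHARGE_C * voltage_v  # kinetic energy gained
    mass_kg = mass_u * ATOMIC_MASS_UNIT_KG
    return math.sqrt(2 * energy_j / mass_kg)


speed_m_s = ion_exhaust_speed(10_000)
print(f"exhaust speed: {speed_m_s / 1000:.1f} km/s")
print(f"exhaust speed: {speed_m_s / METERS_PER_MILE:.1f} miles per second")
```

Because the speed grows only with the square root of the voltage, quadrupling the voltage in this model doubles the exhaust speed.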

The power for Dr. Stuhlinger's "ion rocket" would be supplied by an atomic reactor or by solar energy. The weight of the reactor and its size would no longer be a design problem, since the entire apparatus could be de-gravitated - made weightless. Revolutionary as Dr. Stuhlinger's idea may seem, it is already superseded by the Canadian physicists of the "Project Magnet." The Canadians propose to do away with the bulk of the nuclear reactor and use the existing magnetic fields of the earth and other planets for propulsion.

As we well know, two like magnetic poles repel each other, just as, under certain conditions, an electromagnet repels a so-called diamagnetic metal, such as aluminum. Take a flat aluminum ring, slip it over a strong electromagnet and switch on the current. Repelled by the magnetic field, the ring will fly off with quite a speed (see sketch). Of course, the earth's magnetism is too weak to repel a huge G-ship made of a diamagnetic metal. However, the recent studies of the atomic nucleus and the discovery of G-particles make it possible to rearrange the atomic structure so as to greatly increase the diamagnetic properties of metals. Thus, a G-ship with a magnetic control could be repelled by the earth's magnetic field and it would travel along the magnetic lines of force like the aluminum ring shooting off the electromagnet.

The entire universe is covered by magnetic fields of stars and planets. Those fields intertwine in a complex pattern, but they are always there. By proper selection of those fields, we could navigate our G-ship in space as well as within the earth's magnetic field. And the use of the magnetic repulsion would eliminate the radiation danger of the nuclear reactor and the problem of atomic fuel.

How long it will take to build the weightless craft and G-engines, the gravity experts don't know. George S. Trimble, Vice-President in charge of the G-project at Martin Aircraft Corporation, thinks the job "could be done in about the time it took to build the first atom bomb." And another anti-gravity pioneer, Dudley Clarke, President of Clarke Electronics Laboratories of Palm Springs, California, believes it will be a matter of a few years to manufacture anti-gravity "power packages."

But no matter how many years we have to wait, the amazing anti-gravity research is a reality. And the best guarantee of its early success is the backing of the U.S. aircraft industry - the engineers and technicians who have always given us tomorrow's craft today.

$$\Delta E_{G} = 4\pi G \int d\boldsymbol{r} \int d\boldsymbol{r}'\, \frac{[\mu_{a}(\boldsymbol{r}) - \mu_{b}(\boldsymbol{r})]\,[\mu_{a}(\boldsymbol{r}') - \mu_{b}(\boldsymbol{r}')]}{|\boldsymbol{r} - \boldsymbol{r}'|}.$$