Monday, April 17, 2017

Syria Sarin Gas Attack Staged

By CNu at April 17, 2017

Labels: necropolitics , not a good look , psychopathocracy , unspeakable

Trump Has Received and Will Obey his Marching Orders

By CNu at April 17, 2017

Sunday, April 16, 2017

Six Main Arcs in Human Storytelling Identified by Artificial Intelligence

That explanation comes from a lecture Vonnegut gave, still available on YouTube, in which he maps the narrative arc of popular storylines along a simple graph. The X-axis represents the chronology of the story, from beginning to end, while the Y-axis represents the experience of the protagonist, on a spectrum from ill fortune to good fortune. “This is an exercise in relativity, really,” Vonnegut explains. “The shape of the curve is what matters.”
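As a rough illustration of the graph described above, here is a minimal Python sketch. It assumes a story is sampled as a list of fortune values in chronological order (the list index stands in for the X-axis, the value for the Y-axis). The function name `arc_shape` and the sample numbers are hypothetical, chosen only for this example, and this is not the method used in the research the post title refers to.

```python
from typing import List


def arc_shape(fortunes: List[float]) -> str:
    """Summarize a story arc as alternating 'rise'/'fall' segments.

    `fortunes` holds the protagonist's fortune at successive points in
    the story (hypothetical sample values, ill fortune low, good high).
    """
    segments: List[str] = []
    for prev, curr in zip(fortunes, fortunes[1:]):
        if curr == prev:
            continue  # a flat step does not change the shape
        step = "rise" if curr > prev else "fall"
        # Record a segment only when the direction changes.
        if not segments or segments[-1] != step:
            segments.append(step)
    return "-".join(segments) if segments else "flat"


# Hypothetical samples for a story where things get worse, then better.
man_in_hole = [0.5, 0.2, -0.8, -0.3, 0.9]
print(arc_shape(man_in_hole))  # fall-rise

# Shifting every value leaves the shape unchanged, which is one way to
# read "the shape of the curve is what matters".
print(arc_shape([f + 10 for f in man_in_hole]))  # fall-rise
```

Only the direction of change is kept, so two stories with very different absolute fortunes can share the same shape.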

By CNu at April 16, 2017

Labels: AI , narrative , What IT DO Shawty...

Artificial Intelligence Will Disclose Cetacean Souls

By CNu at April 16, 2017

Labels: AI , computationalism , scientific mystery

Physical Basis for Morphogenesis: On Growth and Form

By CNu at April 16, 2017

Labels: computationalism , evolution , scientific mystery , What IT DO Shawty...

Saturday, April 15, 2017

2017 Website@Most Important Lab at Harvard and Arguably the World?!?!

Harvard Molecular Technologies | Contact | Calendar | Courses | G.Church | Lab photos & List | News | Publications

Journals: MIT, Harvard, Science, Pubmed. History of this web page.

By CNu at April 15, 2017

Labels: Ass Clownery , comedy gold , FAIL

Hacking and Reprogramming Cells Like Computers

By CNu at April 15, 2017

Labels: computationalism , Genetic Omni Determinism GOD

A Programming Language For Living Cells?

By CNu at April 15, 2017

Labels: computationalism , Genetic Omni Determinism GOD

Friday, April 14, 2017

Open Thread: More Human Than Human

Brain item -- AI processing problem...??

would require AI to have the listener's entire life history stored in its memory to determine proper context....??

Your brain fills gaps in your hearing without you realising

...Here's a no-nonsense AI item: Turns out AI is not sufficiently stupid to allow PC liberals to shove ridiculous egalitarian concepts down its throat. AI just looks at the *FACTS* and calls it like it sees it....

Machine learning algorithms are picking up deeply ingrained race and gender prejudices concealed within the patterns of language use, scientists say

By CNu at April 14, 2017

Labels: AI , big don special , Open Thread , school

Thursday, April 13, 2017

The Dark Secret at the Heart of Artificial Intelligence

By CNu at April 13, 2017

Labels: AI

Is Artificial Intelligence a Threat to Christianity?

By CNu at April 13, 2017

Labels: AI

Will Artificial Intelligence Redefine Human Intelligence?

By CNu at April 13, 2017

Labels: AI

Wednesday, April 12, 2017

Why is the CIA WaPo Giving Space to Assange to Make His Case?

By CNu at April 12, 2017

Labels: information anarchy , wikileaks wednesday

The Blockchain and Us

A digital ledger in which transactions made in bitcoin or another cryptocurrency are recorded chronologically and publicly.

From en.oxforddictionaries.com/definition/blockchain
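To make the dictionary definition concrete, here is a minimal Python sketch of a ledger that records transactions in order. The definition quoted above says nothing about how entries are linked. Chaining each entry to the hash of the previous one is added here as the commonly used mechanism, and all names (`append_block`, `is_valid`, the sample transactions) are hypothetical. It is a toy, with no network, consensus, or currency.

```python
import hashlib
import json
from typing import Dict, List

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def block_hash(index: int, prev_hash: str, transaction: str) -> str:
    """Hash an entry's contents together with the previous entry's hash."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "transaction": transaction},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(ledger: List[Dict], transaction: str) -> None:
    """Append a transaction at the end, preserving chronological order."""
    index = len(ledger)
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS_PREV_HASH
    ledger.append(
        {
            "index": index,
            "prev_hash": prev_hash,
            "transaction": transaction,
            "hash": block_hash(index, prev_hash, transaction),
        }
    )


def is_valid(ledger: List[Dict]) -> bool:
    """Check that every entry still matches its hash and its predecessor."""
    expected_prev = GENESIS_PREV_HASH
    for i, block in enumerate(ledger):
        if block["index"] != i or block["prev_hash"] != expected_prev:
            return False
        recomputed = block_hash(block["index"], block["prev_hash"], block["transaction"])
        if block["hash"] != recomputed:
            return False
        expected_prev = block["hash"]
    return True


ledger: List[Dict] = []
append_block(ledger, "alice pays bob 5")    # hypothetical transactions
append_block(ledger, "bob pays carol 2")
print(is_valid(ledger))                     # True

ledger[0]["transaction"] = "alice pays bob 500"  # tamper with history
print(is_valid(ledger))                     # False
```

Because each entry commits to the one before it, quietly rewriting an old transaction is detectable by anyone holding a copy of the ledger.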

By CNu at April 12, 2017

Labels: tactical evolution , wikileaks wednesday

The Content Of Sci-Hub And Its Usage

By CNu at April 12, 2017

Labels: scientific morality , wikileaks wednesday

ISP Data Pollution: Hiding the Needle in a Pile of Needles?

By CNu at April 12, 2017

Labels: Ass Clownery , FAIL , wikileaks wednesday

Tuesday, April 11, 2017

Chasing Perpetual Motion in the Gig Economy

By CNu at April 11, 2017

Labels: Collapse Casualties , hustle-hard , musical chairs , niggerization

Student Debt Bubble Ruins Lives While Sucking Life Out of the Economy

While the headline consumer price index is 2.7 per cent, between 2016 and 2017 published tuition and fee prices rose by 9 per cent at four-year state institutions, and 13 per cent at posher private colleges.

A large chunk of the hike was due to schools hiring more administrators (who “brand build” and recruit wealthy donors) and building expensive facilities designed to lure wealthier, full-fee-paying students. This not only leads to excess borrowing on the part of universities — a number of them are caught up in dicey bond deals like the sort that sunk the city of Detroit — but higher tuition for students.
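For a sense of scale, the quoted figures (2.7 per cent headline CPI against tuition rises of 9 and 13 per cent) can be turned into inflation-adjusted increases. The short Python sketch below assumes the usual ratio-based adjustment. The helper name `real_increase` is hypothetical, and the results are only as good as the quoted percentages.

```python
def real_increase(nominal_pct: float, inflation_pct: float) -> float:
    """Return the inflation-adjusted increase, in per cent.

    Uses (1 + nominal) / (1 + inflation) - 1 rather than simple
    subtraction, since both rates compound on the same base year.
    """
    return ((1 + nominal_pct / 100) / (1 + inflation_pct / 100) - 1) * 100


cpi = 2.7  # headline consumer price index, per cent (from the quoted text)

print(round(real_increase(9, cpi), 2))   # four-year state institutions: 6.13
print(round(real_increase(13, cpi), 2))  # private colleges: 10.03
```

On these numbers, published tuition outpaced consumer prices by roughly 6 and 10 percentage points in a single year.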

BazHurl

After a career in equities, having graduated the Dreamy Spires with significant, not silly, debt, I had the pleasure of interviewing lots of the best and brightest graduates from European and US universities. Finance was attracting far more than its deserved share of the intellectual pie in the 90s and Noughties in particular, so at times it was distressing to meet outrageously talented young men and women wanting to genuflect at the altar of the $, instead of building the Flux Capacitor. But the greater take-away was how mediocre and homogeneous most of the grads were becoming. It seemed the longer they had studied and deferred entry into the Great Unwashed, the more difficult it was to get anything original or genuine from them.

Piles and piles of CVs of the same guys and gals: straight A's since emerging into the world, polyglots, founders of every financial and charitable university society you could dream up … but could they honestly answer a simple question like “Fidelity or Blackrock – Who has robbed widows and orphans of more?” Hardly. In short, few of them qualified as the sort of person you would willingly invite to sit next to you for fifteen hours a day, doing battle with pesky clients and triumphing over greedy competitors. All these once-promising 22-to-24-year-olds had somehow been hard-wired by the same robot and, worse, all were entitled. Probably fair enough, as they had excelled at everything that had been asked of them up until meeting my colleagues and me on the trading floors.

Contrast this with the very different experience of meeting visiting sixth formers from a variety of secondary schools, who used to tour the bank and, with some gentle prodding, light up the Q&A sessions at tour's end, fizzing with enthusiasm and desire. Now THESE kids I would hire ahead of the blue-chipped grads, most days. They were raw material that could be worked with and shaped into weapons. It was patently clear that University was no longer adding the expected value to these candidates and in fact was doing quite the reverse.

By CNu at April 11, 2017

Labels: debt slavery , Livestock Management , parasitic , reality casualties

Navient: Student Loans Designed to Fail

By CNu at April 11, 2017

Labels: banksterism , Collapse Crime , debt slavery

Monday, April 10, 2017

Jeff Sessions Will Reinstate the War on Black Men Drugs

By CNu at April 10, 2017

Labels: not-seeism , professional and managerial frauds , psychopathocracy , Rule of Law , Small Minority , Toxic Culture? , What IT DO Shawty...

Chipocalypse Now - I Love The Smell Of Deportations In The Morning

sky | Donald Trump has signalled his intention to send troops to Chicago to ramp up the deportation of illegal immigrants - by posting a...

-

theatlantic | The Ku Klux Klan, Ronald Reagan, and, for most of its history, the NRA all worked to control guns. The Founding Fathers...

-

NYTimes | The United States attorney in Manhattan is merging the two units in his office that prosecute terrorism and international narcot...

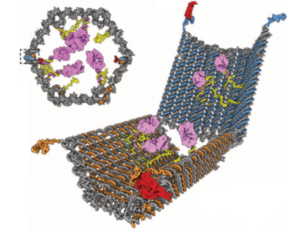

-

Wired Magazine sez - Biologists on the Verge of Creating New Form of Life ; What most researchers agree on is that the very first functionin...