Tuesday, March 27, 2018

With "Platform" Capitalism - Value Creation Depends on Privacy Invasion

By CNu at March 27, 2018

Labels: facebook IS evil , governance , Livestock Management , neofeudalism , shameless , TIA , Toxic Culture? , transbiological , tricknology

Saturday, February 17, 2018

Future Genomics: Don't Edit A Rough Copy When You Can Print A Fresh New One

By CNu at February 17, 2018

Labels: evolution , Exponential Upside , Possibilities , transbiological , What Now?

Watch The Edge Video, It's About The "Church Approach" To Global Warming

By CNu at February 17, 2018

Labels: Exponential Upside , transbiological , What Now?

Digital Biological Conversion

By CNu at February 17, 2018

Labels: Exponential Upside , transbiological , What Now?

Wednesday, January 10, 2018

Money As Tool, Money As Drug: The Biological Psychology of a Strong Incentive

By CNu at January 10, 2018

Labels: addiction , banksterism , debt slavery , dopamine , hegemony , human experimentation , hypnosis , transbiological , tricknology , What IT DO Shawty...

Monday, January 01, 2018

MindSmash Pipes Up Into The Digital Catheter...,

By CNu at January 01, 2018

Labels: as above-so below , Breakaway Civilization , Ecce Homo , gain of function , human experimentation , Intimate Droning , niggerization , nootropism , transbiological , What Now?

Is Ideology The Original Augmented Reality?

By CNu at January 01, 2018

Labels: Exponential Upside , human experimentation , Intimate Droning , Minority Report , neuromancy , nootropism , transbiological

Thursday, December 07, 2017

3-D Printed, WiFi Connected, No Electronics...,

By CNu at December 07, 2017

Labels: Exponential Upside , Noo/Nano/Geno/Thermo , transbiological , tricknology , unintended consequences , What Now?

3-D Printed Living Tattoos

By CNu at December 07, 2017

Labels: Exponential Upside , Noo/Nano/Geno/Thermo , transbiological , tricknology , What Now?

Sunday, October 01, 2017

Quantum Criticality in Living Systems

By CNu at October 01, 2017

Labels: AI , fractal unfolding , gain of function , Genetic Omni Determinism GOD , quantum , transbiological , What IT DO Shawty...

What is Life?

By CNu at October 01, 2017

Labels: AI , evolution , Genetic Omni Determinism GOD , transbiological , What IT DO Shawty...

Thursday, September 28, 2017

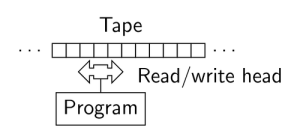

Trans-Turing Machines

Turing Machine = Foundation of Computer Science

"Further disclosed herein is a Trans-Turing machine that includes a plurality of nodes, each node comprising at least one quantum degree of freedom that is coupled to at least one quantum degree of freedom in another node and at least one classical degree of freedom that is coupled to at least one classical degree of freedom in another node, wherein the nodes are configured such that the quantum degrees of freedom decohere to classicity and thereby alter the classical degrees of freedom, which then alter the decoherence rate of remaining quantum degrees of freedom; at least one input signal generator configured to produce an input signal that recoheres classical degrees of freedom to quantum degrees of freedom; and a detector configured to receive quantum or classical output signals from the nodes."

By CNu at September 28, 2017

Labels: as above-so below , azoth , Exponential Upside , transbiological , What IT DO Shawty...

Monday, September 18, 2017

The Promise and Peril of Immersive Technologies


By CNu at September 18, 2017

Labels: Breakaway Civilization , change , co-evolution , cognitive error , cognitive infiltration , complications , neuromancy , transbiological , unintended consequences

Virtual Reality Health Risks...,

By CNu at September 18, 2017

Labels: Breakaway Civilization , change , co-evolution , cognitive error , cognitive infiltration , complications , neuromancy , transbiological , unintended consequences

Sunday, September 17, 2017

Artificial Intelligence is Lesbian

By CNu at September 17, 2017

Labels: AI , common sense , Ecce Homo , transbiological

Thursday, August 31, 2017

Toward Ubiquitous Robotic Organisms

By CNu at August 31, 2017

Labels: AI , Exponential Upside , transbiological , What Now?

IoT Extended Sensoria

By CNu at August 31, 2017

Labels: Exponential Upside , Minority Report , Noo/Nano/Geno/Thermo , transbiological , What Now?

Wednesday, August 30, 2017

The Weaponization of Artificial Intelligence

perception, conversation, decisionmaking).

Advances in AI are making it possible to cede to machines many tasks long regarded as impossible for machines to perform. Intelligent systems aim to apply AI to a particular problem or domain, the implication being that the system is programmed or trained to operate within the bounds of a defined knowledge base. Autonomous function is at a system level rather than a component level. The study considered two categories of intelligent systems: those employing autonomy at rest and those employing autonomy in motion. In broad terms, systems incorporating autonomy at rest operate virtually, in software, and include planning and expert advisory systems, whereas systems incorporating autonomy in motion have a presence in the physical world and include robotics and autonomous vehicles.

One of the less well-known ways that autonomy is changing the world is in applications that include data compilation, data analysis, web search, recommendation engines, and forecasting. Given the limitations of human abilities to rapidly process the vast amounts of data available today, autonomous systems are now required to find trends and analyze patterns. There is no need to solve the long-term AI problem of general intelligence in order to build high-value applications that exploit limited-scope autonomous capabilities dedicated to specific purposes. DoD’s nascent Memex program is one of many examples in this category.

Rapid global market expansion for robotics and other intelligent systems to address consumer and industrial applications is stimulating increasing commercial investment and delivering a diverse array of products. At the same time, autonomy is being embedded in a growing array of software systems to enhance speed and consistency of decision-making, among other benefits. Likewise, governmental entities, motivated by economic development opportunities in addition to security missions and other public sector applications, are investing in related basic and applied research.

Applications include commercial endeavors, such as IBM’s Watson, the use of robotics in ports and mines worldwide, autonomous vehicles (from autopilot drones to self-driving cars), automated logistics and supply chain management, and many more. Japanese and U.S. companies invested more than $2 billion in autonomous systems in 2014, led by Apple, Facebook, Google, Hitachi, IBM, Intel, LinkedIn, NEC, Yahoo, and Twitter.

A vibrant startup ecosystem is spawning advances in response to commercial market opportunities; innovations are occurring globally, as illustrated in Figure 2 (top). Startups are targeting opportunities that drive advances in critical underlying technologies. As illustrated in Figure 2 (bottom), machine learning—both application-specific and general purpose—is of high interest. The market-pull for machine learning stems from a diverse array of applications across an equally diverse spectrum of industries, as illustrated in Figure 3.

By CNu at August 30, 2017

Labels: AI , Noo/Nano/Geno/Thermo , transbiological , What Now? , wikileaks wednesday

Friday, August 04, 2017

The Search for Extraterrestrial Life and Post-Biological Intelligence

By CNu at August 04, 2017

Labels: AI , Possibilities , transbiological , visitors?

What Do You Think About Machines That Think?

We have numerous problems to tackle and find solutions. [...] We need more artist programmers and artistic programming. It is time for our mind machines to grow out of a youthful period that has lasted sixty years. (Thomas A. Bass, p. 552)

Should we not ask what thinking machines might think about? Will they want and expect citizens' rights? Will they have consciousness? What kind of government would an AI choose for us? What kind of society would they want to structure for themselves? Or is "their" society "our" society? Will we and the AIs include each other in our respective circles of empathy?

By CNu at August 04, 2017

Labels: AI , Possibilities , transbiological
