tandfonline | In portraiture,

subjects are mostly depicted with a greater portion of the left side of

their face (left hemiface) facing the viewer. This bias may be induced

by the right hemisphere's dominance for emotional expression and agency.

Since negative emotions are particularly portrayed by the left

hemiface, and since asymmetrical hemispheric activation may induce

alterations of spatial attention and action-intention, we posited that

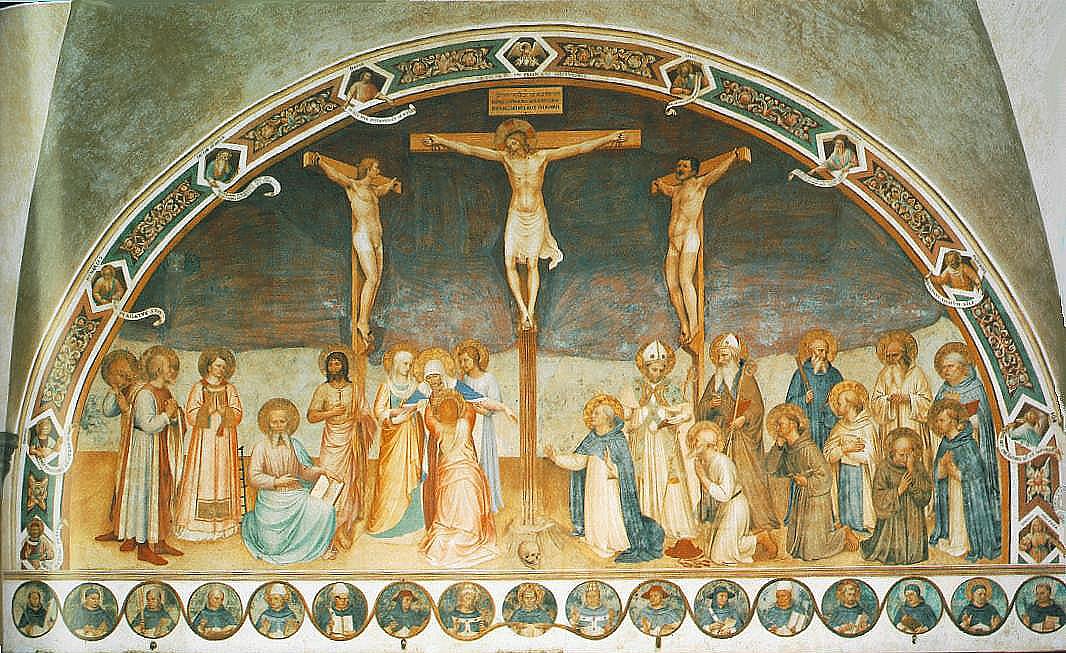

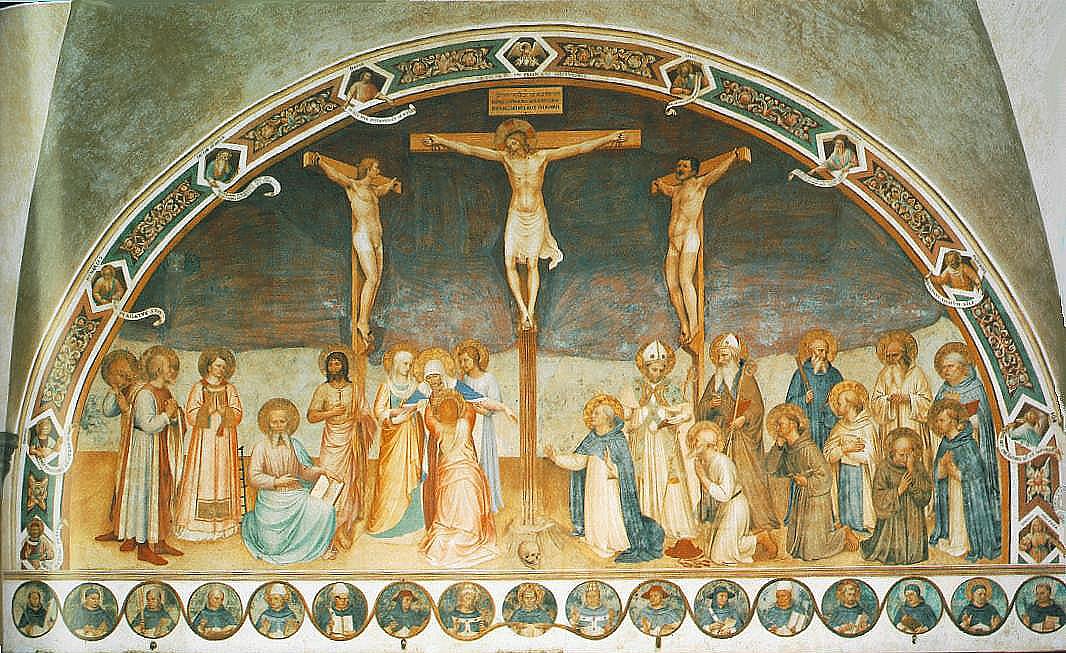

paintings of the painful and cruel crucifixion of Jesus would be more

likely to show his left hemiface than observed in portraits of other

people. By analyzing depictions of Jesus's crucifixion from book and art

gallery sources, we determined a significantly greater percent of these

crucifixion pictures showed the left hemiface of Jesus facing the

viewer than found in other portraits. In addition to the facial

expression and hemispatial attention-intention hypotheses, there are

other biblical explanations that may account for this strong bias, and

these alternatives will have to be explored in future research.

In portraits, most subjects are depicted with their head rotated rightward, with more of the left than right side of the subject's face being shown. For example, in the largest study of facial portraiture, McManus and Humphrey (1973) studied 1474 portraits and found a 60% bias toward portraying a greater portion of the subjects’ left than right hemiface. Nicholls, Clode, Wood, and Wood (1999) found the same left hemiface bias even when accounting for the handedness of the painter.

Multiple theories have been proposed in an attempt to explain the genesis of this left hemiface bias in portraits. One hypothesis is that the right hemisphere is dominant for mediating facial emotional expressions. In an initial study, Buck and Duffy (1980) reported that patients with right hemisphere damage were less capable of facially expressing emotions than those with left hemisphere damage when viewing slides of familiar people, unpleasant scenes, and unusual pictures. These right-left hemispheric differences in facial expressiveness have been replicated in studies involving the spontaneous and voluntary expression of emotions in stroke patients with focal lesions (Borod, Kent, Koff, Martin, & Alpert, 1988; Borod, Koff, Lorch, & Nicholas, 1985; Borod, Koff, Perlman Lorch, & Nicholas, 1986; Richardson, Bowers, Bauer, Heilman, & Leonard, 2000).

Hemispheric asymmetries have also been reported in more “naturalistic” settings outside the laboratory. For example, Blonder, Burns, Bowers, Moore, and Heilman (1993) videotaped interviews with patients and spouses in their homes and found that patients with right hemisphere damage were rated as less facially expressive than left hemisphere-damaged patients and normal controls. These lesion studies suggest that the right hemisphere has a dominant role in mediating emotional facial expressions. Whereas corticobulbar fibers that innervate the forehead are bilateral, the contralateral hemisphere primarily controls the lower face. Thus, these lesion studies suggest that the left hemiface below the forehead, which is innervated by the right hemisphere, may be more emotionally expressive.

This right hemisphere-left hemiface dominance postulate has been further supported by studies of normal subjects portraying emotional facial expressions. For example, Borod et al. (1988) asked subjects to portray emotions, either by verbal command or visual imitation. The judges who rated these facial expressions ranked the left side of the face as expressing stronger emotions. Sackeim and Gur (1978) showed normal subjects photographs of normal people facially expressing their emotions and asked participants to rate the intensity of the emotion being expressed. However, before showing these pictures of people making emotional faces, Sackeim and Gur altered the photographs. They either paired the left hemiface with its own mirror image to form a full face made up of two left hemifaces, or formed full faces from right hemifaces in the same way. Participants rated the left-hemiface composites as more emotionally expressive than the right-hemiface composites. Triggs, Ghacibeh, Springer, and Bowers (2005) administered transcranial magnetic stimulation (TMS) to the motor cortex of 50 subjects during contraction of bilateral orbicularis oris muscles and analyzed motor evoked potentials (MEPs). They found that the MEPs elicited in the left lower face were larger than those in the right lower face, and thus the left face might appear to be more emotionally expressive because it is more richly innervated.
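The composite-face construction used in such rating studies can be illustrated with a minimal sketch. This is not Sackeim and Gur's actual procedure; it assumes a frontal face image stored as a list of pixel rows, with the facial midline at the central column, and the function name `hemiface_composites` is hypothetical. Note that in a frontal photograph the subject's left hemiface appears on the viewer's right.

```python
def hemiface_composites(face):
    """Build left-left and right-right composites from a frontal face image.

    `face` is a list of pixel rows (assumed layout). The midline is assumed
    to be the central column; for odd widths the middle column is dropped.
    """
    width = len(face[0])
    half = width // 2
    left_left, right_right = [], []
    for row in face:
        image_left = row[:half]            # subject's RIGHT hemiface
        image_right = row[width - half:]   # subject's LEFT hemiface
        # Mirror each half about the midline and join it to the original half.
        left_left.append(image_right[::-1] + image_right)
        right_right.append(image_left + image_left[::-1])
    return left_left, right_right


# Toy 2 x 4 "image" whose pixel values make the mirroring easy to see.
toy_face = [[0, 1, 2, 3],
            [4, 5, 6, 7]]
left_left, right_right = hemiface_composites(toy_face)
print(left_left)    # [[3, 2, 2, 3], [7, 6, 6, 7]]
print(right_right)  # [[0, 1, 1, 0], [4, 5, 5, 4]]
```

Each composite is perfectly symmetric, so any difference in rated intensity between the two composites can be attributed to the hemiface they were built from.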

Another reason portraits often have the subjects rotated to the right may be related to the organization of the viewer's brain. Both lesion studies (e.g., Adolphs, Damasio, Tranel, & Damasio, 1996; Bowers, Bauer, Coslett, & Heilman, 1985; DeKosky, Heilman, Bowers, & Valenstein, 1980) and physiological and functional imaging studies (e.g., Davidson & Fox, 1982; Puce, Allison, Asgari, Gore, & McCarthy, 1996; Sergent, Ohta, & Macdonald, 1992) have revealed that the right hemisphere is dominant for the recognition of emotional facial expressions and the recognition of previously viewed faces (Hilliard, 1973; Jones, 1979). In addition, studies of facial recognition and the recognition of facial emotional expressions have demonstrated that facial pictures shown in the left visual field and left hemispace are better recognized than those viewed on the right (Conesa, Brunold-Conesa, & Miron, 1995). Since the right hemisphere is dominant for facial recognition and the perception of facial emotions when viewing faces, the normal viewer of portraits may attend more to the left than right visual hemispace and hemifield. When the head of a portrait is turned to the right and the observer focuses on the middle of the face (midsagittal plane), more of the subject's face would fall in the viewer's left visual hemispace and thus be more likely to project to the right hemisphere.

Agency is another concept that may influence the direction of facial deviation in portraiture. Chatterjee, Maher, Gonzalez Rothi, and Heilman (1995) demonstrated that when right-handed individuals view a scene with more than one figure, they are more likely to see the left figure as being the active agent and the right figure as being the recipient of action, or the patient. From this perspective the artist is the agent, and perhaps he or she is more likely to paint the left hemiface of the subject, which from the artist's perspective is more to the right, the position of the patient. Support for this agency hypothesis comes from studies in which individuals rated traits of left- versus right-profiled patients; those with the right cheek exposed were considered more “active” (Chatterjee, 2002).

Taking this background information into account and applying it to depictions of the crucifixion of Jesus Christ highlights the various influences on profile painting in portraiture. Specifically, we confirm the predilection to display the left hemiface in portraiture and predict the same in portraits of Jesus's crucifixion.

The strongest artistic portrayals of a patient being subject to cruel and painful agents are images of the crucifixion of Jesus. The earliest depiction of Christ on the cross dates back to around 420 AD. As Christianity had existed for several centuries before that, this seems a late beginning for this type of art. Because of the strong focus on Christ's resurrection and the disgrace of his agony and death, art historians postulate that early followers were hesitant to show Christ on the cross. The legalization of Christianity also may have lifted the stigma. Based on the artwork still in existence from that period, Jesus was often pictured alive during the crucifixion scene. Several centuries later, from the end of the seventh century to the beginning of the eighth century, Christ is more often shown dead on the cross (Harries, 2005).
