Generative design, along with its closely allied technology, topology optimization, is a technology that has overpromised and underdelivered. A parade of parts from generative design providers is dismissed outright as unmanufacturable, impractical, or just goofy looking. Their one saving grace may be that the odd-looking parts save considerable weight compared to parts that engineers have designed. But that cannot overcome the fact that they can only be 3D printed, or that their shape is optimized for one load case and ignores all others. Many of these stringy “optimized” shapes would buckle under a compressive load. We could never put such a stringy, strange shape in a car, plane or consumer product. We don’t want to be laughed at.
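To put a number on the buckling concern, Euler's formula for a slender pinned-pinned strut, P_cr = π²EI/L², can be compared with the load at which the same strut yields in tension. The sketch below assumes a hypothetical 5 mm diameter steel rod, 200 mm long, with E = 200 GPa and a 250 MPa yield stress; these values are illustrative and are not taken from any generatively designed part.

```python
import math

def euler_critical_load(E, I, length, k=1.0):
    """Euler critical buckling load P_cr = pi^2 * E * I / (k * L)^2.

    k is the effective-length factor (1.0 for a pinned-pinned strut).
    Units must be consistent; here N, mm and MPa (N/mm^2).
    """
    return math.pi ** 2 * E * I / (k * length) ** 2

def round_rod_loads(d, length, E, yield_stress):
    """Return (tensile yield load, Euler buckling load) for a solid round rod."""
    area = math.pi * d ** 2 / 4          # cross-sectional area, mm^2
    inertia = math.pi * d ** 4 / 64      # second moment of area of a solid circle, mm^4
    tension = yield_stress * area        # load at which the rod yields in tension, N
    buckling = euler_critical_load(E, inertia, length)
    return tension, buckling

# Hypothetical "stringy" member: 5 mm steel rod, 200 mm long, pinned at both ends.
tension, buckling = round_rod_loads(d=5.0, length=200.0, E=200_000.0, yield_stress=250.0)
print(f"yields in tension at {tension:.0f} N, buckles in compression at {buckling:.0f} N")
# prints: yields in tension at 4909 N, buckles in compression at 1514 N
```

For these assumed values, the rod buckles at roughly 1.5 kN in compression but does not yield in tension until roughly 4.9 kN, so a member sized for a single tensile load case can fail at less than a third of that load when the load reverses. Euler's formula is an idealized upper bound; real struts with imperfections buckle sooner.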

The design software industry, eager to push a technology with such potential (one it acquired at great cost), sees the rejection of generative design as evidence that engineers are stuck in their ways, content to work with familiar but outdated tools and unable to see the potential of a game-changing technology. Engineers, on the other hand, say they never asked for generative design, at least not in so many words.

Like 3D printing, another technology desperate for engineering acceptance, generative design has promoters who see its “solutions” as perfect. One such solution was a generatively designed bracket. Kevin Quinn, GM’s director of Additive Design and Manufacturing, discussed the odd-looking part as a modeling experiment, with no promise of mass production. It was obviously fragile and relied on 3D printing for its manufacture, making it impractical to produce in the quantities required. It may have withstood crash test loads, but reverse loading would have splintered it. Yet the part went on to appear in every publication (even ours) and was lauded almost everywhere as a victory for generative design and for lightweighting, a pressing automotive industry priority.

Now more than ever, engineers find themselves leaning into hurricane winds of technology and a software industry that promised us solutions. We are trained to accept technology, to bend it to our will, to improve products we design, but the insistence that software has found a solution to our design problems with generative design puts us in an awkward thanks-but-no-thanks position. We find ourselves in what Gartner refers to as “the trough of disillusionment.”

That is a shame for a technology that, if it were to work and evolve, could put the “aided” in computer-aided design. (For the sake of argument, let’s say that computer-aided design as it exists now is no more than an accurate way to represent a design that an engineer or designer has a fuzzy picture of in their head.)

How much trouble would it be to add some of what we know, our insight, to generative design? After all, AI, which could supply that insight, is another technology the software industry is fond of pushing. Watching a topology optimization take shape can be about as painful as watching a roomful of monkeys bang randomly on keyboards in the hope of writing a Shakespeare play. If, by some miracle, they start to form “What light through yonder window breaks?”, our best hope of finishing the line would be to recognize it and type it ourselves. Similarly, when an optimization routine starts creating a stringy shape, bam! Let’s make it a cable and move on. A smooth shape is forming? Jump ahead and make it a flat surface. See a gap forming? Make it a machinable slot. Know a frame will undergo torsion? Stop the madness and use a round tube. (The shapes made with already optimized elements can still be optimized by adjusting angles and lengths.)
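As a sketch of that last point, consider a hypothetical symmetric two-bar truss hanging a vertical load P from two supports, with a half-span b and each bar at angle θ from horizontal. Each bar carries P / (2 sin θ) and is b / cos θ long; sizing each bar so its stress equals an allowable σ gives a material volume V(θ) = 2Pb / (σ sin 2θ). Once the shape is fixed as two straight bars, only one parameter remains to optimize. The code assumes tension-only members (no buckling check), ignores self-weight, and uses a plain golden-section search rather than any particular product's optimizer.

```python
import math

def truss_volume(theta, load, half_span, allowable_stress):
    """Material volume of a symmetric two-bar truss hanging a vertical load.

    theta: member angle from horizontal (radians).
    Each member carries F = load / (2 sin theta), is sized so F / A equals the
    allowable stress, and is half_span / cos theta long.
    """
    force = load / (2.0 * math.sin(theta))
    area = force / allowable_stress
    length = half_span / math.cos(theta)
    return 2.0 * area * length

def golden_section_min(f, lo, hi, tol=1e-8):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0   # 1/phi, about 0.618
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    while abs(b - a) > tol:
        if f(c) < f(d):
            b = d        # minimum lies in [a, d]
        else:
            a = c        # minimum lies in [c, b]
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
    return (a + b) / 2.0

# Hypothetical numbers: 10 kN load, 500 mm half-span, 150 MPa allowable stress.
best = golden_section_min(
    lambda t: truss_volume(t, 10_000.0, 500.0, 150.0),
    math.radians(5), math.radians(85))
print(f"optimal member angle: {math.degrees(best):.2f} degrees")
```

The search lands on 45 degrees, where sin 2θ is largest, matching the closed form. The point is less the arithmetic than the size of the problem: one angle to tune instead of a density value for every element in a mesh.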

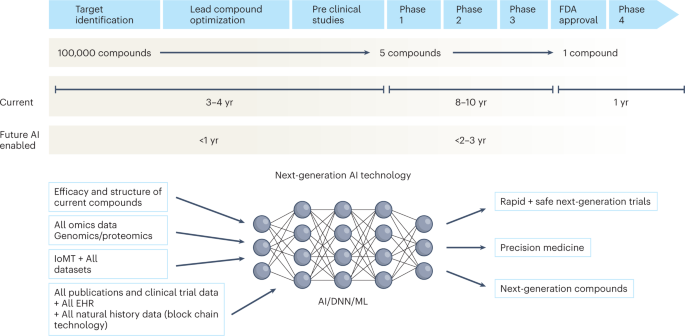

What is strangely absent from generative design to this day is AI. We are reminded of a recent conference (pre-pandemic, of course) at which we watched a software vendor go around a generatively designed shape, replacing it bit by bit with standard shape elements: a round rod here, a smooth surface there. Really? We should have to do that?
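Even a crude, rule-based version of that recognition step would spare engineers the manual cleanup. The sketch below is hypothetical throughout: the `EmergentFeature` fields, the thresholds and the mapping rules are illustrative assumptions based on the substitutions suggested earlier (stringy to cable, smooth to flat surface, gap to slot, torsion to round tube), not the API of any generative design product. A real system would also have to extract these features from the evolving mesh, which is the hard part and is not shown.

```python
from dataclasses import dataclass
from enum import Enum

class Element(Enum):
    CABLE = "cable"
    ROUND_ROD = "round rod"
    FLAT_SURFACE = "flat surface"
    MACHINABLE_SLOT = "machinable slot"
    ROUND_TUBE = "round tube"
    KEEP_FREEFORM = "keep free-form"

@dataclass
class EmergentFeature:
    """Hypothetical summary of one region of a partially optimized shape."""
    aspect_ratio: float      # length / thickness of the region
    max_curvature: float     # 1/mm; near zero means the surface is nearly flat
    is_void: bool            # True if the region is a gap in the material
    tension_only: bool       # True if every load case puts the member in tension
    carries_torsion: bool    # True if any load case twists the member

def suggest_element(f: EmergentFeature,
                    slender: float = 10.0,
                    flat_curvature: float = 1e-3) -> Element:
    """Map an emerging feature to a standard, manufacturable element.

    The thresholds are illustrative placeholders, not validated values.
    """
    if f.is_void:
        return Element.MACHINABLE_SLOT
    if f.carries_torsion:
        return Element.ROUND_TUBE        # closed round sections resist twist efficiently
    if f.aspect_ratio >= slender:
        # A cable can only pull; a member that is ever compressed needs a rod.
        return Element.CABLE if f.tension_only else Element.ROUND_ROD
    if f.max_curvature <= flat_curvature:
        return Element.FLAT_SURFACE
    return Element.KEEP_FREEFORM

print(suggest_element(EmergentFeature(40.0, 0.05, False, True, False)).value)  # cable
```

The checks are ordered so that voids and torsion take precedence, and a slender member becomes a cable only if no load case compresses it, which guards against the buckling problem of stringy shapes.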

Classical optimization techniques are a separate technology. Like CAD and CAE, they are based on mathematics. Unlike CAD, they have their own language. Optimization borrows language and nomenclature from calculus (optimum, dy/dx = 0, etc.) and adds some of its own. While optimization can be applied to any phenomenon, its application to 3D shapes is most relevant to this discussion. Each iteration of a shape is validated with a numerical technique: finite element analysis (FEA) for structural shapes, computational fluid dynamics (CFD) for fluid flow. Therefore, generative design speaks the language of simulation, with terms like boundary conditions, degrees of freedom, forces and moments. That language is foreign to designers and long forgotten by the typical product design engineer, which runs counter to the goal of democratizing generative design.
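A minimal sketch of that optimize-then-validate loop, in pure Python, on a hypothetical three-bar truss. It uses fully stressed design, a classical sizing heuristic (not topology optimization, and not necessarily what any commercial tool does): each bar's area is scaled by the ratio of its stress to an allowable stress, and a tiny stiffness-method FEA re-validates the structure after every resize. The simulation vocabulary shows up directly: fixed supports are the boundary conditions, the free node has two degrees of freedom, and the load is a force vector. Geometry, material values, minimum gauge and all function names are assumptions for illustration.

```python
import math

E = 200_000.0          # Young's modulus, MPa (N/mm^2), steel
SIGMA_ALLOW = 150.0    # allowable stress, MPa
A_MIN = 1.0            # minimum gauge area, mm^2

# One free node at the origin; each bar runs from it to a fixed support (mm).
SUPPORTS = [(-1000.0, 1000.0), (0.0, 1000.0), (1000.0, 1000.0)]

def analyze(areas, load):
    """Tiny FEA: solve K u = f for the free node's two degrees of freedom.

    Returns bar stresses (MPa, tension positive) for areas in mm^2 and a
    load (Fx, Fy) in N. The supports are fully fixed boundary conditions.
    """
    k = [[0.0, 0.0], [0.0, 0.0]]
    geometry = []
    for (sx, sy), a in zip(SUPPORTS, areas):
        length = math.hypot(sx, sy)
        ex, ey = sx / length, sy / length      # unit vector, free node -> support
        stiffness = E * a / length             # axial stiffness EA/L
        k[0][0] += stiffness * ex * ex
        k[0][1] += stiffness * ex * ey
        k[1][0] += stiffness * ey * ex
        k[1][1] += stiffness * ey * ey
        geometry.append((ex, ey, length))
    det = k[0][0] * k[1][1] - k[0][1] * k[1][0]
    ux = (load[0] * k[1][1] - k[0][1] * load[1]) / det   # Cramer's rule
    uy = (k[0][0] * load[1] - k[1][0] * load[0]) / det
    # A bar's elongation is -(u . e) because its support end does not move.
    return [E * -(ux * ex + uy * ey) / length for ex, ey, length in geometry]

def fully_stressed_design(load, areas=(100.0, 100.0, 100.0), iterations=100):
    """Resize each bar toward the allowable stress, re-running the analysis
    (the validation step) after every resize."""
    areas = list(areas)
    for _ in range(iterations):
        stresses = analyze(areas, load)
        areas = [max(A_MIN, a * abs(s) / SIGMA_ALLOW)
                 for a, s in zip(areas, stresses)]
    return areas, analyze(areas, load)

areas, stresses = fully_stressed_design(load=(0.0, -20_000.0))
for i, (a, s) in enumerate(zip(areas, stresses), start=1):
    print(f"bar {i}: area {a:7.2f} mm^2, stress {s:7.2f} MPa")
```

With the purely vertical load shown, the loop steers material into the vertical middle bar, whose stress converges to the 150 MPa allowable, while the two diagonal bars shrink to the assumed minimum gauge. Fully stressed design is a heuristic; for statically indeterminate structures it does not guarantee minimum weight.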

The best technology is one that just works, requires little learning, and may not even need an introduction. Think of AI implementations by Google, delivered to our delight with no fanfare, not even an announcement. Here was Google correcting our spelling, answering our questions, even completing our thoughts and translating languages. Scholars skilled in adapting works from one language to another were startled by how skilled Google had become. Google held no press conference, issued no press release, and did not even blog about this wondrous feat of AI. It just worked. And it required no learning.

By contrast, IBM trumpeted its AI technology, Watson, which, after digesting the sum of human knowledge, easily beat Jeopardy! champion Ken Jennings. But when it came to health care, Watson bombed at the very task it was most heavily promoted for: helping doctors diagnose and cure cancer, according to the Wall Street Journal.

The point is that quick success and acceptance come to technology that integrates seamlessly into how people already do things and provides delight and a happy surprise, not to technology that demands retraining, asking users to do things in a whole new way with a new, complicated application and a new language or terminology to learn.